
AIs are giving us a kicking in board games and video games, but what else are they good for?

Credit: Facebook AI Research

In recent years, our species has created new forms of intelligence, able to outperform us in other ways. One of the most famous of these artificial intelligences (AIs) is AlphaGo, developed by DeepMind. In just a few years, it has learned to play the 4,000-year-old strategy game Go, beating two of the world’s strongest players.

Other software developed by DeepMind has learned to play classic eight-bit video games, notably Breakout, in which players must use a bat to hit a ball at a wall, knocking bricks out of it. CEO Demis Hassabis is fond of saying that the software figured out how to beat the game purely from the pixels on the screen. That often glosses over the fact that the company first taught it how to count and how to read the on-screen score, and gave it the explicit goal of maximizing that score. Even the smartest AIs need a few hints about our social mores.

But what else are AIs good for? Here are five tasks in which they can equal, or surpass, humans.

Building wooden block towers

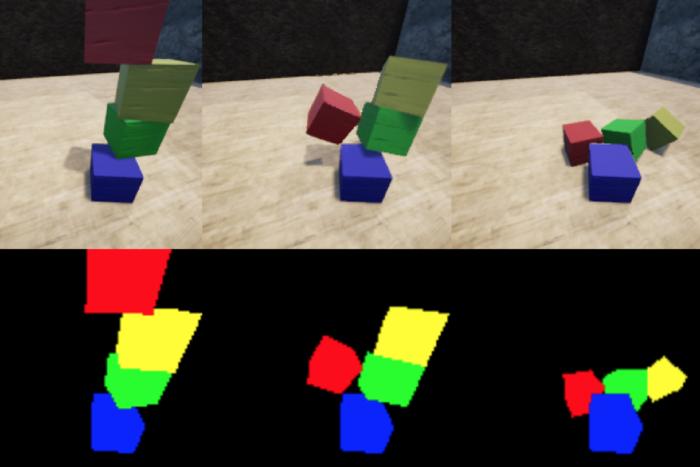

AIs don’t just play video games; they play with traditional toys, too. Like us, they get some of their earliest physics lessons from playing with wooden blocks, piling them up and then watching them fall. Researchers at Facebook have built an AI using convolutional neural networks that can attain human-level performance at predicting how towers of blocks will fall, simply by watching films and animations of block towers standing or falling.

Lip-reading

Lip-reading—figuring out what someone is saying from the movement of their lips alone—can be a useful skill if you’re hard of hearing or working in a noisy environment, but it’s notoriously difficult. Much of the information contained in human speech—the position of the teeth and tongue, and whether sounds are voiced or not—is invisible to a lip reader, whether human or AI. Nevertheless, researchers at the University of Oxford, England, have developed a system called LipNet that can lip-read short sentences with a word error rate of 6.6 percent. Three human lip-readers participating in the research had error rates between 35.5 percent and 57.3 percent.

Among the applications the researchers see for their work are silent dictation and speech recognition in noisy environments, where the visual component will add to accuracy. Since the AI’s output is text, it could find work closed-captioning TV shows for broadcast networks, or transcribing surveillance video for security services.
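For readers curious how a figure like LipNet’s 6.6 percent is arrived at: word error rate is conventionally the number of word substitutions, deletions, and insertions needed to turn the system’s output into the correct transcript, divided by the number of words in that transcript. The Python sketch below shows that standard calculation. It is not the Oxford team’s evaluation code; the function name and the sample sentences are made up for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length.

    Illustrative helper (hypothetical name), not code from the LipNet research.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j words of the hypothesis.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # matching i reference words against nothing: i deletions
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from output
            insertion = curr[j - 1] + 1  # extra word in the output
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# Made-up example: one wrong word ("in" for "at") and one dropped word ("now").
rate = word_error_rate("place blue at f two now", "place blue in f two")
print(f"{rate:.1%}")
```

Because insertions count as errors too, a system that pads its output with extra words can score above 100 percent, which is why the measure is called a rate rather than a share of words missed.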

Transcribing

AIs can be even more helpful when the audio quality is better, according to Microsoft, where researchers have been tweaking an AI-based automated speech recognition system so it performs as well as, or better than, people. Microsoft’s system now has an error rate of 5.9 percent on one test set from the National Institute of Standards and Technology, the same as a human transcription service that Microsoft hired for comparison. On another test it scored 11.1 percent, narrowly beating the humans, who scored 11.3 percent.

That’s one situation where being better than a human might not be sufficient: If you were thinking you could dictate your next memo, you might find it quicker to type and correct it yourself, even with just two fingers.

News reporting

This story was written by a human, but the next one you read might be written by an AI.

And that might not be a bad thing: MogIA, developed by the Indian company Genic, doesn’t write stories, but when MogIA predicted Donald Trump would win the U.S. presidential election it did better than most political journalists.

When it comes to writing, AIs are particularly quick at turning structured data into words, as a Financial Times journalist found when pitted against an AI called Emma from Californian startup Stealth.

Emma filed a story on unemployment statistics just 12 minutes after the figures were released, three times faster than the FT’s journalist. While the AI’s copy was clear and accurate, it missed the news angle: the number of jobseekers had risen for the first time in a year. While readers want news fast, it’s not for nothing that journalists are exhorted to “get it first, but first, get it right.”

Putting those employment statistics in context would have required knowledge that Emma apparently didn’t have, but that’s not an insurmountable problem. By reading dictionaries, encyclopedias, novels, plays, and assorted reference materials, another AI, IBM’s Watson, famously learned enough context to win the general knowledge quiz show Jeopardy!

Disease diagnosis

After that victory, Watson went to medical school, absorbing 15 million pages of medical textbooks and academic papers about oncology. According to some reports, that has allowed Watson to diagnose cancer cases that stumped human doctors, although IBM pitches the AI as an aid to human diagnosis, not a replacement for it.

More recently, IBM has put Watson’s ability to absorb huge volumes of information to work helping diagnose rare illnesses, some so uncommon that most doctors might see only a few cases in a lifetime.

Doctors in the Centre for Undiagnosed and Rare Diseases at University Hospital Marburg, in Germany, will use this instance of Watson to help them deal with the thousands of patients referred to them each year, some of them bringing thousands of pages of medical records to be analyzed.

Authors including Vernor Vinge and Ray Kurzweil have postulated that AI technology will develop to a point, still many years off, that they call “the singularity,” when the combined problem-solving capacity of the human race will be overtaken by that of artificial intelligences.

If the singularity arrives in our lifetimes, some of us might be able to thank AIs like Watson for helping keep us alive long enough to see it.