Late on Tuesday night, as it became clear that Donald J. Trump would defeat Hillary Clinton to win the presidential election, a private chat sprang up on Facebook among several vice presidents and executives of the social network.

What role, they asked each other, had their company played in the election’s outcome?

Facebook’s top executives concluded that they should address the issue and assuage staff concerns at a quarterly all-hands meeting. They also called a smaller meeting with the company’s policy team, according to three people who saw the private chat and are familiar with the decisions; they requested anonymity because the discussion was confidential.

Facebook has been in the eye of a postelection storm for the last few days, embroiled in accusations that it helped spread misinformation and fake news stories that influenced how the American electorate voted. The online conversation among Facebook’s executives on Tuesday, which was one of several private message threads that began among the company’s top ranks, showed that the social network was internally questioning what its responsibilities might be.

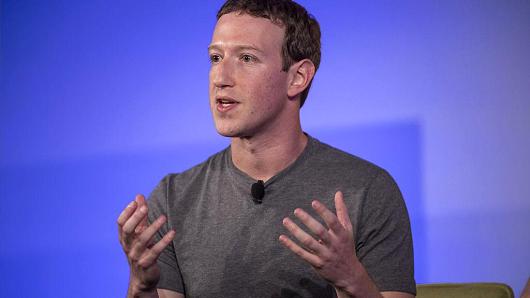

Even as Facebook has outwardly defended itself as a nonpartisan information source (Mark Zuckerberg, its chief executive, said at a conference on Thursday that Facebook affecting the election was “a pretty crazy idea”), many company executives and employees have been asking one another if, or how, they shaped the minds, opinions and votes of Americans.

Some employees are worried about the spread of racist and so-called alt-right memes across the network, according to interviews with 10 current and former Facebook employees. Others are asking whether they contributed to a “filter bubble” among users who largely interact with people who share the same beliefs.

Even more are reassessing Facebook’s role as a media company and wondering how to stop the distribution of false information. Some employees have been galvanized to send suggestions to product managers on how to improve Facebook’s powerful news feed: the streams of status updates, articles, photos and videos that users typically spend the most time interacting with.

“A fake story claiming Pope Francis — actually a refugee advocate — endorsed Mr. Trump was shared almost a million times, likely visible to tens of millions,” Zeynep Tufekci, an associate professor at the University of North Carolina who studies the social impact of technology, said of a recent post on Facebook. “Its correction was barely heard. Of course Facebook had significant influence in this last election’s outcome.”

This image of Facebook as a partisan influencer and distributor of bad information is at odds with how the company views itself, former and current employees said. Chris Cox, a senior vice president of product and one of Mr. Zuckerberg’s top lieutenants, has long described Facebook as an unbiased and blank canvas to give people a voice. Employees and executives genuinely believed they were well-intentioned and acting as a force for good, these people said.

Facebook declined to comment beyond a previously released statement that it was “just one of many ways people received their information — and was one of the many ways people connected with their leaders, engaged in the political process and shared their views.”

On Saturday night, Mr. Zuckerberg posted a lengthy status update to his Facebook page with some of his thoughts on the election.

“Of all the content on Facebook, more than 99% of what people see is authentic. Only a very small amount is fake news and hoaxes,” Mr. Zuckerberg wrote. “Overall, this makes it extremely unlikely hoaxes changed the outcome of this election in one direction or the other.”

He added: “I am confident we can find ways for our community to tell us what content is most meaningful, but I believe we must be extremely cautious about becoming arbiters of truth ourselves.”

The postelection questioning caps a turbulent year for Facebook, during which its power to influence what its 1.79 billion users watch, read and believe has increasingly been criticized. Almost half of American adults rely on Facebook as a source of news, according to a study by the Pew Research Center. And Facebook often emphasizes to advertisers its ability to sway its users, portraying itself as an effective mechanism to help promote their products.

Inside Facebook, employees have become more aware of the company’s role in media after several incidents involving content the social network displayed in users’ news feeds.

In May, the company grappled with accusations that politically biased employees were censoring some conservative stories and websites in Facebook’s Trending Topics section, a part of the site that shows the most talked-about stories and issues on Facebook. Facebook later laid off the Trending Topics team.

In September, Facebook came under fire for removing a Pulitzer Prize-winning photo of a naked 9-year-old girl, Phan Thi Kim Phuc, as she fled napalm bombs during the Vietnam War. The social network took down the photo for violating its nudity standards, even though the picture was an illustration of the horrors of war rather than child pornography.

Both those incidents seemed to worsen a problem of fake news circulating on Facebook. The Trending Topics episode paralyzed Facebook’s willingness to make any serious changes to its products that might compromise the perception of its objectivity, employees said. The “napalm girl” incident reminded many insiders at Facebook of the company’s often tone-deaf approach to nuanced situations.

Throughout, Mr. Zuckerberg has defended Facebook as a place where people can share all opinions. When employees objected in October to the stance of Peter Thiel, a Facebook board member, in supporting Mr. Trump, Mr. Zuckerberg said, “We care deeply about diversity” and reiterated that the social network gave everyone the power to share their experiences.

More recently, issues with fake news on the site have mushroomed. Multiple Facebook employees were particularly disturbed last week when a fake news site called The Denver Guardian spread across the social network with negative and false messages about Mrs. Clinton, including a claim that an F.B.I. agent connected to Mrs. Clinton’s email disclosures had murdered his wife and shot himself.

On Thursday, after a companywide meeting at Facebook, many employees said they were dissatisfied with an address from Mr. Zuckerberg, who offered comments to staff that were similar to what he has said publicly.

Even in private, Mr. Zuckerberg has continued to resist the notion that Facebook can unduly affect how people think and behave. In a Facebook post circulated on Wednesday to a small group of his friends, which was obtained by The New York Times, Mr. Zuckerberg challenged the idea that Facebook had a direct effect on the way people voted.

In the three-paragraph post, the chief executive cited several statistics about low voter turnout during the election.

Then Mr. Zuckerberg wrote: “So rather than focusing on strengths or weaknesses in specific demographics, or other factors that may have pushed this race in one direction or another, these stats clearly suggest what many people have said all along. Both candidates were

Source: CNBC